Xiaomi MiMo Large Language Model Launch: How It Achieves Up to 2.6× Faster Inference

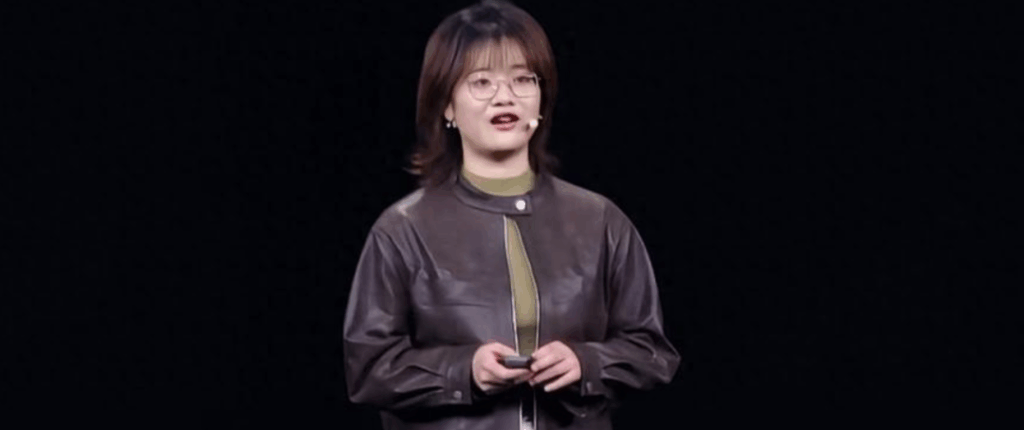

On December 17, 2025, Xiaomi officially unveiled MiMo-V2-Flash, the latest model in its MiMo large language model family, at its Full Ecosystem Partner Conference. The launch marks another major milestone in the company's push into artificial intelligence. It was presented by Luo Fuli, head of the MiMo large model project, whose first public appearance since joining Xiaomi drew significant attention. The release highlights Xiaomi's technical expertise in inference efficiency and model architecture design.

At the event, Luo Fuli walked through the core design philosophy behind MiMo-V2-Flash. The model is built for maximum inference efficiency and features a newly developed three-layer MTP (Multi-Token Prediction) module for inference acceleration. Instead of producing one token per full forward pass, the MTP layers draft several upcoming tokens, and the main model then verifies those drafts in parallel. This yields a 2.0× to 2.6× inference speedup, which lets MiMo-V2-Flash serve a wider range of use cases, particularly real-time applications that need fast response times.
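The draft-then-verify idea behind "parallel token verification" can be illustrated with a toy example. This is a minimal sketch that assumes a generic greedy speculative-decoding scheme, not Xiaomi's actual implementation. The functions `speculative_decode` and `expected_tokens_per_pass`, along with the toy integer "models" `draft` and `target`, are hypothetical stand-ins: `draft` plays the role of the three MTP layers, and `target` plays the full model. The expected-tokens formula assumes that each drafted token is accepted independently with probability `alpha`.

```python
from typing import Callable, List, Tuple

# Hypothetical toy "model": maps the current token sequence to its greedy next token.
NextToken = Callable[[List[int]], int]


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new: int, k: int = 3) -> Tuple[List[int], int]:
    """Greedy draft-then-verify decoding (illustrative sketch only).

    `draft` stands in for k lightweight MTP layers proposing tokens;
    `target` stands in for the full model. Returns the generated tokens
    and the number of verification rounds (one full-model pass each).
    """
    out = list(prompt)
    passes = 0
    while len(out) - len(prompt) < max_new:
        # 1. Draft k tokens cheaply, each conditioned on the previous drafts.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. Verify all drafts against the full model. In a real system the
        #    k positions are scored in ONE batched forward pass, so count 1.
        passes += 1
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                # First mismatch: keep the full model's token and stop.
                accepted.append(expected)
                break
            accepted.append(tok)
        else:
            # All k drafts matched: the same pass yields one extra token.
            accepted.append(target(out + accepted))
        out.extend(accepted)
    return out[len(prompt):len(prompt) + max_new], passes


def expected_tokens_per_pass(alpha: float, k: int) -> float:
    """Idealized tokens produced per full-model pass.

    Assumes each drafted token is accepted independently with probability
    `alpha`, giving 1 + alpha + ... + alpha**k. Drafting and verification
    overhead are ignored, so this is not a wall-clock speedup.
    """
    if alpha == 1.0:
        return float(k + 1)
    return (1 - alpha ** (k + 1)) / (1 - alpha)


if __name__ == "__main__":
    # Toy full model and an imperfect draft that is sometimes wrong.
    full = lambda seq: (3 * seq[-1] + 1) % 11
    rough = lambda seq: full(seq) if len(seq) % 3 else 0
    tokens, rounds = speculative_decode(full, rough, [1], max_new=20, k=3)
    print(f"{len(tokens)} tokens in {rounds} full-model passes")
    print(expected_tokens_per_pass(0.8, k=3))
```

Every accepted token is checked against the full model, so the output is identical to plain greedy decoding. The only thing that changes is how many full-model passes are needed. With `alpha = 0.8` and `k = 3`, the idealized figure is about 2.95 tokens per pass. These numbers are illustrative and are not Xiaomi's measurements.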

Xiaomi's continued investment in artificial intelligence reflects a broader industry shift: as AI technology matures, more companies are focusing on balancing inference efficiency against model performance. MiMo-V2-Flash is a concrete example of this trend in practice.

For more information, feel free to reach out to us!

info@bishopmi.com | info@bishopeco.com

Bishop is the largest official and authorized distributor of Xiaomi ecosystem products and mobile phones in Hong Kong and Shenzhen, with more than 350 models in stock.

No MOQ (minimum order quantity) on any product! We carry all product series from brands including Xiaomi, Realme, OnePlus, Lenovo, Amazfit, Udfine, Haylou, QCY, Imilab, Mibro, 70mai, JBL, Sony, Nintendo, Muzen, and more.

Thank you for your support and trust!